Recruiting Systems the AI way: Deep Neural Network for Extracting Information from Job Descriptions

Deep Neural Network to extract Information from job descriptions.

Recruiting software is evolving rapidly, and these tools are becoming more complex and more intelligent thanks to the massive amount of information available on the internet.

Let’s take a closer look from a marketing point of view.

By 2017, Indeed claimed to have over 100 million resumes, which made the platform one of the biggest sources of hires in the US that year. Other platforms such as LinkedIn, Glassdoor and CareerBuilder are becoming popular too, as they become the second biggest source of external hires in the US.

We have similar platforms in MENA, such as Wuzzuf, Bayt, Forasna and Tanqeeb.

Since AI and automation tools are set to become a core part of recruiting software, this post discusses how a deep neural network can be used to build such a tool for extracting information from massive data. Our scope is job descriptions posted by employers or HR departments.

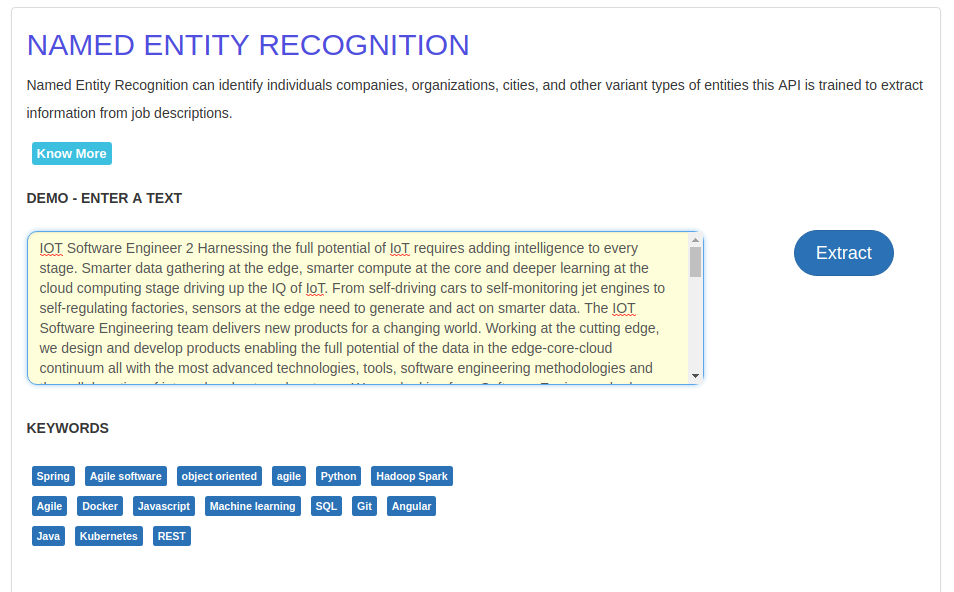

Named Entity Recognition

Named Entity Recognition (NER) is the process of locating named entities in text and classifying them into predefined categories such as names of persons, locations and organizations. In some research literature it is called sequence labeling.

In this post we build a named entity recognition model that identifies the skills required in a job description paragraph.

Skills:

==========================

Soft-Skills, e.g. "customer service, verbal communication skills"

Technical-Skills, e.g. "Java, HTTP, networking, LINUX/UNIX, C++, C#, AngularJS, JavaScript, Bootstrap, etc."

Dataset

I searched for a while for an annotated dataset, since our task (named entity recognition) is a supervised learning problem and therefore requires the entity classes to be pre-defined and labeled. In the end I scraped over 20k job posts from Indeed and CareerBuilder.

That still left me without annotated data, so I built a simple command-line annotation tool, backed by a lookup table, to semi-automate the process and save time.

The IOB tagging scheme is followed:

I-TAG: Inside the chunk

B-TAG: Beginning of the chunk

O: Outside the chunk

Used TAGS

B-TECH: Technical Skill (Beginning)

I-TECH: Technical skill (Inside)

B-SOFT: Soft Skill (Beginning)

I-SOFT: Soft Skill (Inside)

B-CERT: Certification (Beginning)

I-CERT: Certification (Inside)

B-YEXP: Years of Experience (Beginning)

I-YEXP: Years of Experience (Inside)
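
The lookup-table pre-annotation mentioned above is not shown in this post, so the following is only a minimal sketch of how such a step could work. The `LOOKUP` table, its entries and the `pre_annotate` helper are hypothetical names made up for illustration; the idea is a greedy longest-match over the tokens that emits the B-/I-/O tags listed above, leaving a human annotator to correct the result.

```python
# Hypothetical lookup table: phrase (lowercased token tuple) -> entity type.
# The table actually used for the dataset is not shown in the post.
LOOKUP = {
    ("ruby", "on", "rails"): "TECH",
    ("ruby",): "TECH",
    ("html",): "TECH",
    ("customer", "service"): "SOFT",
}
MAX_PHRASE_LEN = max(len(phrase) for phrase in LOOKUP)


def pre_annotate(tokens):
    """Assign IOB tags by greedy longest-match against LOOKUP."""
    tags = ["O"] * len(tokens)
    lowered = [t.lower() for t in tokens]
    i = 0
    while i < len(tokens):
        matched = False
        # Try the longest phrase first so "Ruby on Rails" wins over "Ruby".
        for n in range(min(MAX_PHRASE_LEN, len(tokens) - i), 0, -1):
            label = LOOKUP.get(tuple(lowered[i:i + n]))
            if label is not None:
                tags[i] = "B-" + label
                for j in range(i + 1, i + n):
                    tags[j] = "I-" + label
                i += n
                matched = True
                break
        if not matched:
            i += 1
    return tags


tokens = "Proficient in Ruby on Rails and HTML with customer service skills".split()
for token, tag in zip(tokens, pre_annotate(tokens)):
    print(f"{token:12} {tag}")
```

A table like this only covers phrases it already knows, which is why the process stays semi-automatic: unknown skills come out as O and have to be fixed by hand.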

There are public datasets available on Kaggle that are useful for anyone who wants to get started, and I was impressed to see that Wuzzuf has published a corpus on Kaggle too:

- WUZZUF

- Annotated Corpus for Named Entity Recognition

- Indeed Resumes

- ONET Organization for research purposes

Tools Belt

keras

pandas

matplotlib

sklearn

pip install keras

pip install pandas

pip install matplotlib

pip install scikit-learn

# import dependencies
import pandas as pd
import numpy as np
import matplotlib.pyplot as plt
import random
import string
import pickle
from sklearn.base import BaseEstimator, TransformerMixin
from sklearn.metrics import classification_report
from sklearn.model_selection import train_test_split
from keras.preprocessing.sequence import pad_sequences
from keras.utils import to_categorical
from keras.models import Model
from keras.layers import Input, LSTM, Embedding, Dense, TimeDistributed, Dropout, Bidirectional
# CRF comes from the separate keras-contrib package
from keras_contrib.layers import CRF

Let's read some unstructured data in JSON format and construct a dataframe from it.

## Explore ONET Organization Dataset

import json
import os

def read_from_multiple(files):
    """Read several JSON-lines files, parse each line, and merge the
    parsed objects into a single list ready for dataframe construction.
    """
    json_objects = []
    for name in files:
        data_path = os.path.join('../data', name)
        # each line of the file holds one JSON document
        with open(data_path) as fh:
            for line in fh:
                json_objects.append(json.loads(line))
    return json_objects

files = [

'openjobs-jobpostings.jan-2017.json',

'openjobs-jobpostings.mar-2016.json',

'openjobs-jobpostings.oct-2016.json'

]

## parsed json files

data = read_from_multiple(files)

## dataframe construction

df = pd.DataFrame(data)

## select columns

df = df[['title', 'normalizedTitle', 'employmentType', 'jobDescription']]

## discard some data

df = df[df['employmentType'].str.len()!=0]

## remove the list on each row so to be hashable in pandas operations

df.employmentType = df.employmentType.apply(lambda x: x[0])

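| | SENT | WORD | TAG |
|---|---|---|---|
| 437649 | 1084 | Web | O |
| 896 | 1 | business | O |
| 682842 | 1672 | managers | O |
| 408038 | 1001 | Creo | B-TECH |
| 571208 | 1430 | our | O |
| 720411 | 1758 | their | O |
| 36027 | 79 | company | O |
| 390337 | 953 | Ruby | B-TECH |
| 548633 | 1370 | mix | O |
| 584272 | 1463 | care | O |
| 148564 | 335 | roles | O |
| 559345 | 1398 | mentoring | O |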

Let’s write a class that we are going to use to get the full sentence from pandas tabular format

class Tokens2Sent:
    """
    Convert tabular format of tokens to full sentences
    """

    def __init__(self, data):
        self.n_sent = 0
        self.data = data
        # sentence ids must be integers so they can be compared with idx
        self.data['SENT'] = data['SENT'].apply(lambda r: int(r))
        # one list of (word, tag) pairs per sentence id
        self.groups = self.data.groupby("SENT").apply(
            lambda s: [(w, t) for w, t in zip(s["WORD"].values.tolist(),
                                              s["TAG"].values.tolist())])
        self.sentences = [s for s in self.groups]

    def get_paragraph(self, idx=None):
        # fall back to the sentence tracked by n_sent when no id is given
        if idx is None:
            sent = self.data[self.data['SENT'] == self.n_sent].copy().WORD
        else:
            sent = self.data[self.data['SENT'] == idx].copy().WORD
        return " ".join(sent.values)

token2sent = Tokens2Sent(data_df)

print(token2sent.get_paragraph(59))

This position is open as of 8 4 2018 Sr Fullstack Engineer Ruby on Rails Conveniently located in Capitol Hill in Seattle we are a fast growing Seattle based company that offers a software solution that allows industries such as gyms studios and schools to grow their business and develop deeper client relationships We are currently looking for a Senior Software Design Engineer with 7 years software development experience and past success translating UI/UX design wireframes to actual code If you have produced excellent user interfaces for a great application we would love the chance to tell you more about this exciting opportunity …

words = list(set(data_df.WORD.values))

words.append('<EOS>')

n_words = len(words)

print(n_words)

tags = list(set(data_df.TAG.values))

n_tags = len(tags)

print(n_tags)

Let's build dictionaries that map each token and each tag to a unique id. We will use them later to build the lookup table in the Embedding layer of our network.

## mapping between words/tags and their unique ids

word2idx = {w: i for i, w in enumerate(words)}

tag2idx = {t: i for i, t in enumerate(tags)}

Our input is a sequence of tokens and our output is a sequence of tags, and both vary in length from one paragraph to another. That is a problem, because the network needs a fixed-length vector to represent each job description sequence.

Consider the following sequences

Software Engineer is required with the following skills html, css, c++. We are hiring experienced android developer

[Software, Engineer, is, required, with, the, following, skills, html, css, c++]

[We, are, hiring, experienced, android, developer, <EOS>, <EOS>, <EOS>, <EOS>, <EOS>]

## keras utils

from keras.preprocessing.sequence import pad_sequences

pad_sequences(sequences, maxlen=None, dtype='int32', padding='pre', truncating='pre', value=0.0)

https://keras.io/preprocessing/sequence/#pad_sequences

## Pytorch utils

from torch.nn.utils.rnn import pad_sequence

pad_sequence(sequences, batch_first=False, padding_value=0)

# https://pytorch.org/docs/stable/nn.html#torch.nn.utils.rnn.pad_sequence

We solved the problem of variable length by padding the shorter sequences with a special token (<EOS> in the example above).
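
To make the padding step concrete, here is a small pure-Python sketch of what right-padding does. It is an illustration, not the Keras implementation: the `pad_post` helper is a made-up name, and it mimics `padding="post"` together with the default `truncating='pre'` from the `pad_sequences` signature shown above, so over-long sequences lose tokens from the front.

```python
def pad_post(sequences, max_len, pad_value):
    """Right-pad each sequence to max_len; keep the last max_len items if longer."""
    padded = []
    for seq in sequences:
        seq = list(seq)
        if len(seq) > max_len:
            # truncating='pre' drops items from the front of the sequence
            seq = seq[len(seq) - max_len:]
        padded.append(seq + [pad_value] * (max_len - len(seq)))
    return padded


sentences = [
    ["Software", "Engineer", "is", "required"],
    ["We", "are", "hiring"],
]
for row in pad_post(sentences, max_len=4, pad_value="<EOS>"):
    print(row)
```

In the real pipeline the same operation is applied to integer ids rather than strings, with the id of `<EOS>` as the padding value.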

Another problem that may show up in sequence-to-sequence modeling is the high variance in length between very short and very long sequences.

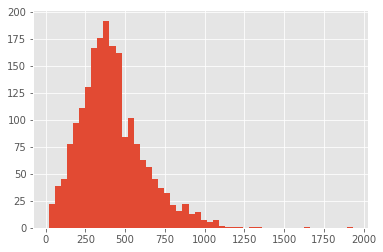

So let’s compute some statistics and plot a histogram of sequence lengths to see how to handle this problem.

From the histogram, the average sequence length is between 75 and 100 tokens, so a maximum length of 120-150 tokens lets us fix the sequence size without losing too much information.
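
One way to sanity-check a cut-off like this is to measure how many sequences fit under it. The sketch below uses made-up lengths and a hypothetical `length_coverage` helper; with the real data the list would come from something like `[len(s) for s in token2sent.sentences]`.

```python
import statistics


def length_coverage(lengths, max_len):
    """Fraction of sequences that fit into max_len tokens without truncation."""
    return sum(1 for n in lengths if n <= max_len) / len(lengths)


# Made-up sequence lengths, for illustration only.
lengths = [12, 48, 75, 80, 88, 92, 97, 110, 140, 320]

print("mean  :", statistics.mean(lengths))
print("median:", statistics.median(lengths))
for max_len in (100, 120, 150):
    print(f"max_len={max_len}: {length_coverage(lengths, max_len):.0%} of sequences fit")
```

A few very long outliers pull the mean up, which is why looking at coverage (or the histogram) is more informative than the mean alone when choosing the maximum length.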

Now let’s write some Python code that prepares the data in the appropriate format. We also split the data into train and test sets, and keep the test set for evaluating the model later.

## input parameters

SEQ_MAX_LEN = 150

INP_MAX_LEN = n_words

OUT_MAX_LEN = n_tags

## variables definitions
# sentences differ in length, so keep plain lists until they are padded
X = [[word2idx[w[0]] for w in s] for s in token2sent.sentences]
Y = [[tag2idx[w[1]] for w in s] for s in token2sent.sentences]
print(X[59]); print(Y[59])

## data preprocessing and sequence padding
# pad words with the <EOS> id (n_words - 1) and tags with "O"
X = pad_sequences(maxlen=SEQ_MAX_LEN, sequences=X, padding="post", value=n_words-1)
Y = pad_sequences(maxlen=SEQ_MAX_LEN, sequences=Y, padding="post", value=tag2idx["O"])
# one-hot encode the tags: shape (n_sentences, SEQ_MAX_LEN, n_tags)
Y = np.array([to_categorical(i, num_classes=n_tags) for i in Y])

## splitter for evaluation

X_train, X_test, y_train, y_test = train_test_split(X, Y, test_size=0.20)
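
For readers unfamiliar with `to_categorical`, the following pure-Python sketch shows the transformation it performs on one padded tag sequence. The `one_hot` helper and the three-tag mapping are illustrative assumptions, not part of the original pipeline.

```python
def one_hot(tag_ids, num_classes):
    """Return one row per tag id with a 1.0 at the id's position."""
    rows = []
    for tag_id in tag_ids:
        row = [0.0] * num_classes
        row[tag_id] = 1.0
        rows.append(row)
    return rows


# Toy tag mapping for illustration; the real tag2idx is built from the data.
toy_tag2idx = {"O": 0, "B-TECH": 1, "I-TECH": 2}
padded_tags = [toy_tag2idx[t] for t in ["B-TECH", "I-TECH", "O", "O"]]

for row in one_hot(padded_tags, num_classes=len(toy_tag2idx)):
    print(row)
```

Each sentence therefore becomes a matrix with one row per token position and one column per tag, which is the target shape the tagging network is trained against.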

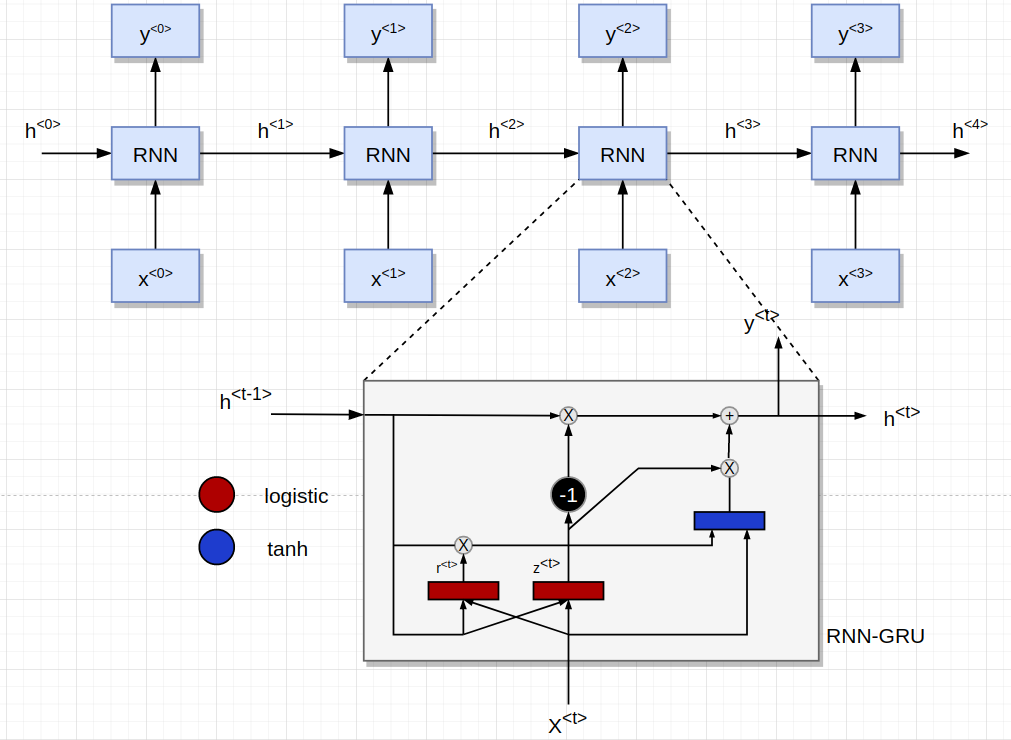

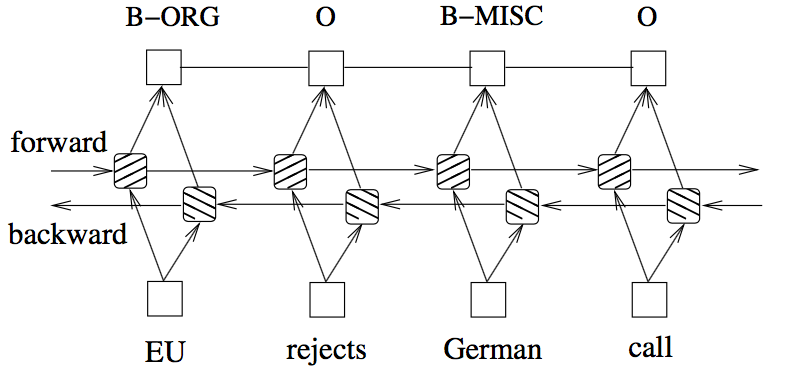

Now it is time for the actual network. This section shows what the model architecture looks like and how an RNN consumes sequential data to make its predictions.

inp = Input(shape=(SEQ_MAX_LEN, ))
# padding uses the <EOS> id (n_words - 1), not 0, so zero-masking is left off
model = Embedding(input_dim=INP_MAX_LEN, output_dim=20, input_length=SEQ_MAX_LEN, mask_zero=False)(inp)
model = Dropout(0.1)(model)
model = Bidirectional(LSTM(units=64, return_sequences=True, recurrent_dropout=0.1))(model)
# feed the dense projection (not the raw LSTM output) into the CRF
model = TimeDistributed(Dense(OUT_MAX_LEN, activation="relu"))(model)
crf = CRF(n_tags) # CRF layer
out = crf(model) # output
model = Model(inp, out)
model.compile(optimizer='rmsprop', loss=crf.loss_function, metrics=[crf.accuracy])
# note: this fits on the full set; pass X_train, y_train to keep the test split held out
model.fit(X, Y, batch_size=32, epochs=5, validation_split=0.1)

Train on 1800 samples, validate on 201 samples

Epoch 1/5

1800/1800 [==============================] - 63s 35ms/step - loss: 0.1959 - acc: 0.8950 - val_loss: 0.0934 - val_acc: 0.9766

Epoch 2/5

1800/1800 [==============================] - 63s 35ms/step - loss: 0.0751 - acc: 0.9789 - val_loss: 0.0761 - val_acc: 0.9777

Epoch 3/5

1800/1800 [==============================] - 60s 33ms/step - loss: 0.0544 - acc: 0.9808 - val_loss: 0.0516 - val_acc: 0.9804

Epoch 4/5

1800/1800 [==============================] - 57s 32ms/step - loss: 0.0364 - acc: 0.9856 - val_loss: 0.0355 - val_acc: 0.9869

Epoch 5/5

1800/1800 [==============================] - 62s 34ms/step - loss: 0.0275 - acc: 0.9893 - val_loss: 0.0378 - val_acc: 0.9872

<keras.callbacks.History at 0x7f67504aae80>

Conditional Random Fields (CRF) Layer

CRFs are often used when there are dependencies between neighboring inputs and their labels. It has been shown that combining an LSTM network with a CRF layer can produce higher accuracy in tasks like sequence tagging (labeling), since the labels of neighboring inputs can influence the prediction for the current input. With a bidirectional LSTM (BI-LSTM) underneath, the model can efficiently use both past and future context to predict the current tag, which gives better and more consistent predictions.

Let’s illustrate this with an example. Suppose we have the following sequence:

we are hiring frontend developer with the following skills UI/UX Design, Angular, VueJS and Graphic Design.

Here the phrase frontend developer can affect the predictions for other tokens such as Angular and Graphic Design, since they are correlated and depend on each other (they frequently appear in the same context).
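
To show how tag-to-tag dependencies can change a prediction, here is a small sketch of Viterbi decoding, the standard way a linear-chain CRF picks its best tag sequence from per-token (emission) scores and tag transition scores. All numbers are made up for illustration and the `viterbi` helper is not the keras-contrib implementation; in a trained model the emissions would come from the BiLSTM and the transitions would be learned.

```python
TAGS = ["O", "B-TECH", "I-TECH"]
NEG = -1e9  # effectively forbids a transition


def viterbi(emissions, transitions):
    """Return the highest-scoring tag sequence.

    emissions[t][j]   : score of tag j at position t
    transitions[i][j] : score of moving from tag i to tag j
    """
    n_tags = len(TAGS)
    score = list(emissions[0])
    backpointers = []
    for emit in emissions[1:]:
        new_score, pointers = [], []
        for j in range(n_tags):
            # best previous tag for landing on tag j at this position
            best_i = max(range(n_tags), key=lambda i: score[i] + transitions[i][j])
            new_score.append(score[best_i] + transitions[best_i][j] + emit[j])
            pointers.append(best_i)
        score = new_score
        backpointers.append(pointers)
    # follow the back-pointers from the best final tag
    best = max(range(n_tags), key=lambda j: score[j])
    path = [best]
    for pointers in reversed(backpointers):
        best = pointers[best]
        path.append(best)
    return [TAGS[i] for i in reversed(path)]


# Made-up emission scores for the tokens "Ruby on Rails".
emissions = [
    [0.1, 2.0, 0.3],   # Ruby : prefers B-TECH
    [1.0, 0.2, 0.9],   # on   : slightly prefers O
    [0.2, 0.8, 1.0],   # Rails: slightly prefers I-TECH
]
# O -> I-TECH is forbidden; staying inside a chunk gets a small bonus.
transitions = [
    [0.0, 0.0, NEG],   # from O
    [0.0, 0.0, 0.5],   # from B-TECH
    [0.0, 0.0, 0.5],   # from I-TECH
]

greedy = [TAGS[max(range(len(TAGS)), key=lambda j: row[j])] for row in emissions]
print("greedy :", greedy)
print("viterbi:", viterbi(emissions, transitions))
```

Picking the best tag per token independently yields B-TECH, O, I-TECH, which is not a valid IOB sequence, while decoding with transition scores keeps "Ruby on Rails" together as one chunk.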

Now let’s see the model learning history plot

hist = model.history.history

hist = pd.DataFrame(hist)

plt.style.use("ggplot")

plt.figure(figsize=(12,12))

plt.plot(hist["acc"])

plt.plot(hist["val_acc"])

plt.show()

Time to make some predictions and see what the network has learned to do, which is to predict a sequence of tags.

i = 59
pred = model.predict(np.array([X[i]]))
pred = np.argmax(pred, axis=-1)
true = np.argmax(Y[i], axis=-1)
print("|{:25} |{:15} |{:15}|".format("Word", "True", "Pred"))
print('=='*30)
# skip padding positions, which hold the <EOS> id (n_words - 1)
for w, t, p in zip(X[i], true, pred[0]):
    if w != n_words - 1:
        print("|{:25} |{:15} |{:15}|".format(words[w], tags[t], tags[p]))

Prediction Result

| Word | True | Pred |

|---|---|---|

| Software | B-TECH | O |

| Design | I-TECH | O |

| Engineer | O | O |

| with | O | O |

| 7 | O | O |

| years | O | O |

| software | B-TECH | O |

| development | I-TECH | O |

| experience | O | O |

| and | O | O |

| past | O | O |

| success | O | O |

| translating | O | O |

| UI/UX | B-TECH | B-TECH |

| design | I-TECH | I-TECH |

| wireframes | O | O |

| to | O | O |

| actual | O | O |

| code | O | O |

| If | O | O |

| you | O | O |

| have | O | O |

| produced | O | O |

| excellent | O | O |

| user | O | O |

| interfaces | O | O |

| the | O | O |

| art | O | O |

| of | O | O |

| programming | O | O |

| 2 | O | O |

| Responsible | O | O |

| for | O | O |

| the | O | O |

| translation | O | O |

| of | O | O |

| the | O | O |

| UI/UX | B-TECH | B-TECH |

| design | I-TECH | I-TECH |

| wireframes | O | O |

| to | O | O |

| actual | O | O |

| code | O | O |

| that | O | O |

| Ensure | O | O |

| the | O | O |

| technical | O | O |

| feasibility | O | O |

| of | O | O |

| UI/UX | B-TECH | B-TECH |

| designs | I-TECH | O |

| 8 | O | O |

| Assure | O | O |

| that | O | O |

| all | O | O |

| user | O | O |

| input | O | O |

| is | O | O |

| validated | O | O |

| before | O | O |

| submitting | O | O |

| to | O | O |

| back | O | O |

| end | O | O |

| What | O | O |

| You | O | O |

| Need | O | O |

| for | O | O |

| this | O | O |

| Position | O | O |

| 7 | O | O |

| years | O | O |

| of | O | O |

| software | B-TECH | O |

| development | I-TECH | O |

| experience | O | O |

| Proficient | O | O |

| in | O | O |

| Ruby | B-TECH | B-TECH |

| on | I-TECH | O |

| Rails | I-TECH | B-TECH |

| AngularJS | B-TECH | B-TECH |

| React | B-TECH | B-TECH |

| Proficient | O | O |

| in | O | O |

| HTML | B-TECH | B-TECH |

| CSS | B-TECH | B-TECH |

| Understanding | O | O |

| of | O | O |

| server | O | O |

| side | O | O |

| CSS | B-TECH | B-TECH |

| platforms | O | O |

| LESS | O | O |

| SASS | B-TECH | B-TECH |

| Good | O | O |

| understanding | O | O |

| of | O | O |

| AJAX | B-TECH | B-TECH |

| JSON | B-TECH | B-TECH |

| • | O | O |

| Nice | O | O |

| to | O | O |

| haves | O | O |

| Experience | O | O |

| with | O | O |

| AWS | B-TECH | B-TECH |

| Experience | O | O |

| with | O | O |

| Docker | B-TECH | B-TECH |

| or | O | O |

| other | O | O |

| container | O | O |

| based | O | O |

| platforms | O | O |

| apply | O | O |

| today | O | O |

| Required | O | O |

| Skills | O | O |

| Ruby | B-TECH | B-TECH |

| On | I-TECH | O |

| Rails | I-TECH | B-TECH |

| AngularJS | B-TECH | B-TECH |

| React | B-TECH | B-TECH |

| HTML | B-TECH | B-TECH |

| CSS | B-TECH | B-TECH |

| AJAX | B-TECH | B-TECH |

| JSON | B-TECH | B-TECH |

| LESS | O | B-TECH |

| SASS | B-TECH | B-TECH |

| AWS | B-TECH | B-TECH |

| Docker | B-TECH | B-TECH |

| UI/UX | B-TECH | B-TECH |

| JQuery | B-TECH | B-TECH |

| If | O | O |

| you | O | O |

| are | O | O |

| a | O | O |

| good | O | O |

| fit | O | O |

| for | O | O |

| the | O | O |

| Sr | O | O |

| Full | O | O |

| Stack | O | O |

| Engineer | O | O |

| Ruby | B-TECH | B-TECH |

| on | I-TECH | O |

| Rails | I-TECH | O |

| 100 | O | O |

| WORK | O | O |

| FROM | O | O |

| HOME | O | O |

| position | O | O |

| and | O | O |

| have | O | O |

| a | O | O |

| background | O | O |

| that | O | O |

| includes | O | O |

| Ruby | B-TECH | B-TECH |

| On | I-TECH | O |

| Rails | I-TECH | B-TECH |

| AngularJS | B-TECH | B-TECH |

| React | B-TECH | B-TECH |

| HTML | B-TECH | B-TECH |

| CSS | B-TECH | B-TECH |

| AJAX | B-TECH | B-TECH |

| JSON | B-TECH | B-TECH |

| LESS | O | B-TECH |

| SASS | B-TECH | B-TECH |

| AWS | B-TECH | B-TECH |

| Docker | B-TECH | B-TECH |

| UI/UX | B-TECH | B-TECH |

| JQuery | B-TECH | B-TECH |

| and | O | O |

| you | O | O |

| are | O | O |

| interested | O | O |

| in | O | O |

| form | O | O |

| upon | O | O |

| hire | O | O |

| Ruby | B-TECH | B-TECH |

| On | I-TECH | B-TECH |

| Rails | I-TECH | B-TECH |

| AngularJS | B-TECH | B-TECH |

| React | B-TECH | B-TECH |

| HTML | B-TECH | B-TECH |

| CSS | B-TECH | B-TECH |

| AJAX | B-TECH | B-TECH |

| JSON | B-TECH | B-TECH |

| LESS | O | B-TECH |

| SASS | B-TECH | B-TECH |

| AWS | B-TECH | B-TECH |

| Docker | B-TECH | B-TECH |

| UI/UX | B-TECH | B-TECH |

| JQuery | B-TECH | B-TECH |
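
Since most tokens are tagged O, token-level accuracy can look high even when multi-word skills are split incorrectly. A possible complement, sketched below, is to convert the tags into chunks and compare whole chunks. The `iob_to_spans` helper is a hypothetical name, and the example rows are taken from the "Proficient in Ruby on Rails AngularJS React" part of the table above.

```python
def iob_to_spans(tags):
    """Collect (start, end, label) chunks from IOB tags; end is exclusive.

    An I- tag without a matching open chunk is ignored in this sketch.
    """
    spans, start, label = [], None, None
    for i, tag in enumerate(list(tags) + ["O"]):   # sentinel flushes the last chunk
        inside = tag.startswith("I-") and label == tag[2:]
        if start is not None and not inside:
            spans.append((start, i, label))
            start, label = None, None
        if tag.startswith("B-"):
            start, label = i, tag[2:]
    return spans


words = ["Proficient", "in", "Ruby", "on", "Rails", "AngularJS", "React"]
true = ["O", "O", "B-TECH", "I-TECH", "I-TECH", "B-TECH", "B-TECH"]
pred = ["O", "O", "B-TECH", "O", "B-TECH", "B-TECH", "B-TECH"]

true_spans = set(iob_to_spans(true))
pred_spans = set(iob_to_spans(pred))
correct = true_spans & pred_spans

precision = len(correct) / len(pred_spans)
recall = len(correct) / len(true_spans)
token_accuracy = sum(t == p for t, p in zip(true, pred)) / len(true)

print("true skills:", [" ".join(words[s:e]) for s, e, _ in sorted(true_spans)])
print("pred skills:", [" ".join(words[s:e]) for s, e, _ in sorted(pred_spans)])
print(f"token accuracy={token_accuracy:.2f} precision={precision:.2f} recall={recall:.2f}")
```

On this fragment the model gets five of seven tokens right, yet only two of the three true skills are recovered exactly, because "Ruby on Rails" is split into "Ruby" and "Rails".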

Now it is time to serialize the model for later usage.

### save the computation results

model.save_weights('../models/lstm_tagger.pickle')

model.save_weights('../models/lstm_tagger.h5')

model.save('../models/lstm_tagger_model.model')

with open('../models/tagger_vocab.pickle', 'wb') as f:
    pickle.dump(word2idx, f)

with open('../models/tagger_tags.pickle', 'wb') as f:
    pickle.dump(tag2idx, f)
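
To reuse the tagger later, the pickled vocabulary has to be loaded back and applied to new text. The sketch below does a round trip with a toy vocabulary in a temporary directory. The `encode` helper is a made-up name, and mapping unknown words to the padding id is an assumption of this sketch, since the post does not say how out-of-vocabulary words are handled.

```python
import os
import pickle
import tempfile

# Toy vocabulary standing in for the real word2idx; "<EOS>" is the padding token.
toy_word2idx = {"Ruby": 0, "on": 1, "Rails": 2, "<EOS>": 3}

path = os.path.join(tempfile.mkdtemp(), "tagger_vocab.pickle")
with open(path, "wb") as f:
    pickle.dump(toy_word2idx, f)

with open(path, "rb") as f:
    loaded = pickle.load(f)


def encode(tokens, vocab, max_len, pad_token="<EOS>"):
    """Map tokens to ids, keep the first max_len of them, and right-pad.

    Unknown words fall back to the padding id (an assumption of this sketch).
    """
    pad_id = vocab[pad_token]
    ids = [vocab.get(tok, pad_id) for tok in tokens[:max_len]]
    return ids + [pad_id] * (max_len - len(ids))


print(encode("Ruby on Rails developer".split(), loaded, max_len=6))
```

The resulting id list has the same fixed length the network was trained on, so it can be wrapped in an array and passed to `model.predict`.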

I built a web service using Flask that is consumed through a web interface.
