Arabic Translation System: Seq2Seq Statistical learning

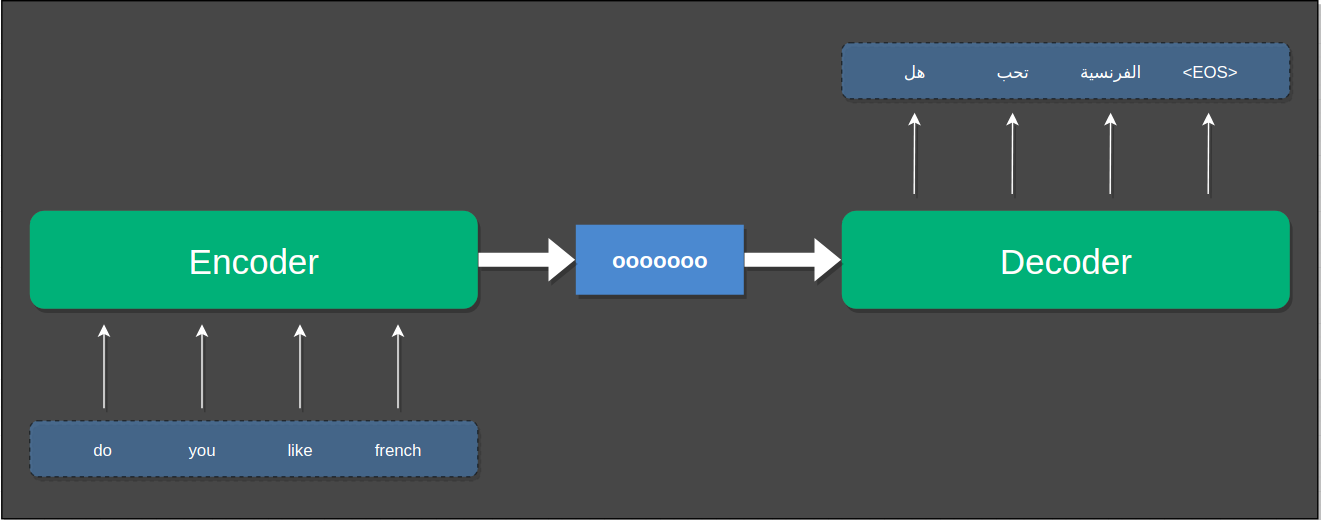

In this post we discuss a sequence-to-sequence model for Arabic machine translation.

Introduction

Deep Neural Networks (DNNs) are very powerful machine learning models that have been used in many natural language processing and computer vision tasks, where they have achieved great performance.

We can see how awesome these models are in products such as Alexa, which can literally read web pages for you, or Google Home, which responds to your commands. And of course we can’t forget Facebook’s face recognition and Snapchat’s ability to manipulate faces. These products are built with the power of neural networks.

Neural networks come in many architectures, each suited to different kinds of problems. For example, the Convolutional Neural Network (CNN) is very powerful for visual image recognition and object detection, and according to some of the research literature it also works well in voice recognition applications.

In a previous post of mine I explained the theoretical aspects of how a Convolutional Neural Network (CNN) might work when recognizing and classifying sequences of handwritten digits, compared with a Recurrent Neural Network (RNN). Both of them showed very good results.

Although deep neural networks are very powerful models that can capture complex information when trained well on large samples, they have a weak point: they struggle when the input and the output vary in length.

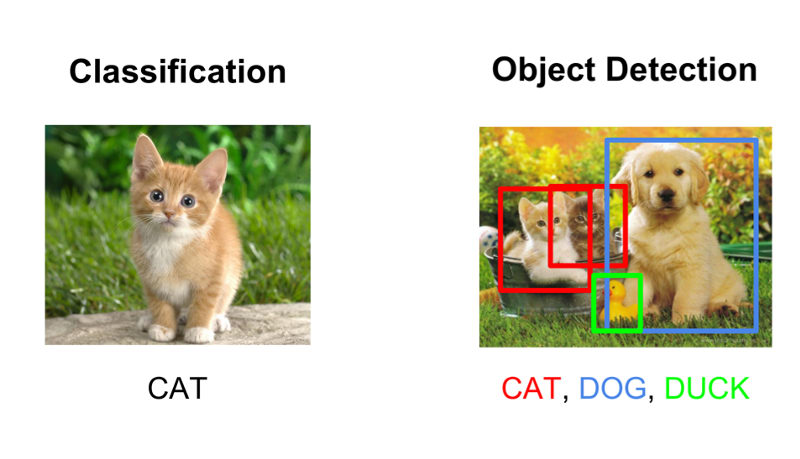

For example, when we deal with an image classification problem we have samples of a fixed size; if that is not the case, we pre-process the images to normalize the training samples. We then apply a multi-layer perceptron (MLP) or a Convolutional Neural Network (CNN), which encodes the information of the training samples into a fixed-size vector and makes predictions by computing a fixed-size probability vector.

Classification VS. Object Detection

RNNs are a type of neural network specialized in handling and processing sequential data, and they scale very well to long sequences of values. An RNN is very powerful because it shares its weights across the entire model through the time-step iterations.

Examples of problems that are best expressed with sequence-to-sequence modeling:

- Speech Recognition

- Machine Translation

- Question Answering

- Named Entity Recognition

- POS Tagging

Theory

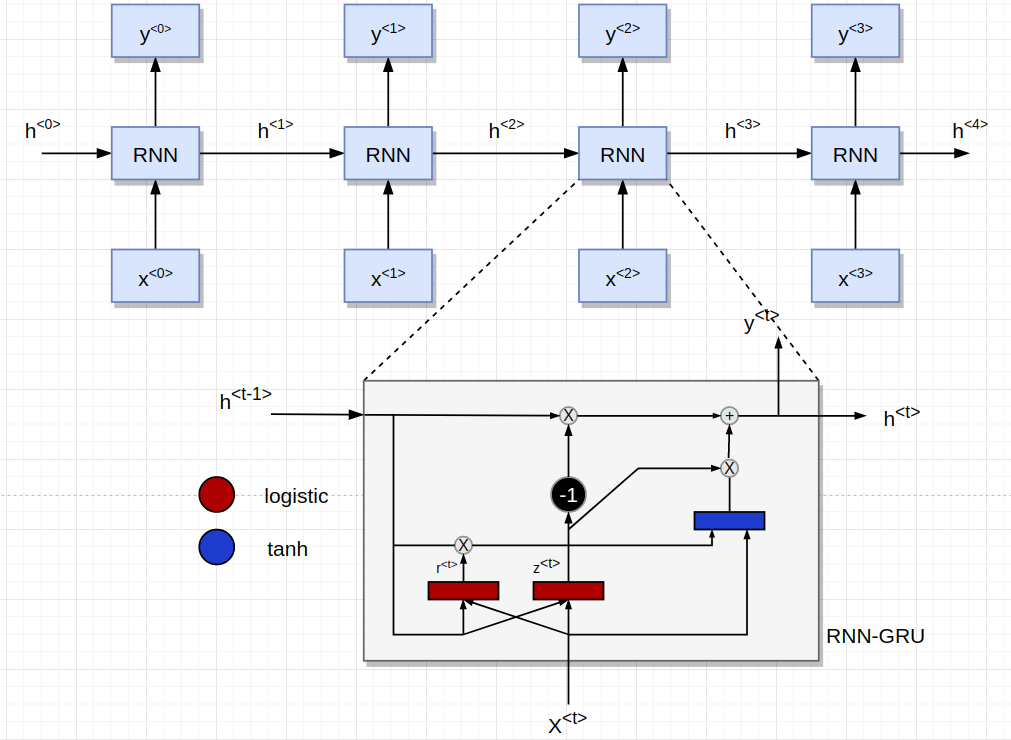

Let’s talk a bit about the theoretical part of RNNs and the mechanism that lets them remember states: they construct a mapping between states through the step-by-step iterations applied to the input sequence.

Given a sequence of input tokens \begin{equation} (x_{1}, x_{2}, x_{3}, \dots, x_{T}) \end{equation}

The RNN should be able to compute a sequence of output tokens \begin{equation} (y_{1}, y_{2}, y_{3}, \dots, y_{T}) \end{equation}
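For reference, a standard (vanilla) RNN computes this mapping by iterating the recurrence below for t = 1, …, T. This is the textbook formulation, given only as a sketch: the hidden state h_t, the weight matrices W^{hx}, W^{hh}, W^{yh} and the tanh nonlinearity are assumptions that the post does not name, and the model trained later uses GRU units, which refine this basic update with gates.

\begin{equation} h_{t} = \tanh\left(W^{hx} x_{t} + W^{hh} h_{t-1}\right), \qquad y_{t} = W^{yh} h_{t} \end{equation}

Note that none of the weight matrices carries a time index: the same parameters are applied at every step.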

We have already mentioned that the weights are shared across time steps, and you may want to understand the motivation behind these shared parameters and why they are important. For instance, assume we want to translate the following sentences:

- It was raining in Egypt yesterday.

- In Egypt it was raining yesterday.

- Yesterday it was raining in Egypt.

If we ask the machine to translate them for us, it should produce:

كانت تمطر في مصر البارحة

If one or more of those three sentences were not seen during training, the translation should still be the same, even though the words occur at different time steps (different positions). Parameter sharing is a great advantage here: the same weights are applied at every time step, and each hidden state is a function of the current input and the previous hidden state, which lets the learned language model generalize across sequences of different forms.
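The idea of reusing one set of weights at every time step can be sketched in a few lines of plain Python. This is a toy vanilla RNN, not the GRU model trained later in the post; the function names, the dimensions, the random weights and the one-hot inputs are all assumptions chosen for illustration.

```python
import math
import random


def rnn_forward(xs, W_xh, W_hh, W_hy):
    """Run a vanilla RNN over xs (a list of input vectors); return one output per step."""
    hidden_size = len(W_hh)
    h = [0.0] * hidden_size  # initial hidden state
    ys = []
    for x in xs:
        # The SAME three matrices are reused at every time step.
        h = [math.tanh(sum(W_xh[i][j] * x[j] for j in range(len(x)))
                       + sum(W_hh[i][k] * h[k] for k in range(hidden_size)))
             for i in range(hidden_size)]
        ys.append([sum(W_hy[o][i] * h[i] for i in range(hidden_size))
                   for o in range(len(W_hy))])
    return ys


def random_matrix(rows, cols, rng):
    """Small random weights; a stand-in for trained parameters."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(0)
INPUT_DIM, HIDDEN_DIM, OUTPUT_DIM = 3, 4, 2
W_xh = random_matrix(HIDDEN_DIM, INPUT_DIM, rng)
W_hh = random_matrix(HIDDEN_DIM, HIDDEN_DIM, rng)
W_hy = random_matrix(OUTPUT_DIM, HIDDEN_DIM, rng)

short_seq = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
long_seq = short_seq + [[0.0, 0.0, 1.0]] * 3

print(len(rnn_forward(short_seq, W_xh, W_hh, W_hy)))  # 2
print(len(rnn_forward(long_seq, W_xh, W_hh, W_hy)))   # 5
```

The number of parameters depends only on the three dimensions, never on the sequence length, so the same network handles a two-token and a five-token input.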

Moreover, suppose we train a traditional fully connected feed-forward network (FC) on the same problem. Such a network would have separate weights for each input position, so it would learn an independent language model for each one, and in this case it might not generalize (dummy mapping).

Visualization of each step using GRU unit

Practice

We’ll need a unique index per word to use as the inputs and targets of the networks later. To keep track of all this we will use a helper class called Dictionary, which has word → index (word_to_idx) and index → word (idx_to_word) dictionaries, as well as a count of each word (words_count) that can be used later to replace rare words.

In the next snippet we build a Dictionary data structure to represent the language dictionary for both the input and the output data; then we use a Reader instance to read and tokenize the data in a more organized way.

import tools  # helper module used by the author (provides cleaner_job)

SOS = 0  # index of the start-of-sentence token
EOS = 1  # index of the end-of-sentence token
FILES = {'ar': 'ara.txt', 'en': 'eng.txt'}


class Dictionary:
    """Maps words to indices and back, and counts how often each word occurs."""

    def __init__(self, name):
        print('Building Dictionary for lang %s' % (name))
        self.name = name
        self.word_to_idx = {}
        self.idx_to_word = {0: "<S>", 1: "</S>"}
        self.words_count = {}
        self.n_words = 2  # Count SOS, EOS special tokens

    def add_word(self, word):
        if word not in self.word_to_idx:
            # New word: assign it the next free index.
            self.word_to_idx[word] = self.n_words
            self.words_count[word] = 1
            self.idx_to_word[self.n_words] = word
            self.n_words += 1
        else:
            self.words_count[word] += 1

    def add_sentence(self, sentence):
        for word in sentence.split():
            self.add_word(word)


class Reader:
    """Reads one language file and cleans each line."""

    def __init__(self, lang, path, max_len=10, min_len=1, max_chars=40):
        self.lang = lang
        self.path = path
        self.sentences = []
        self.max_len = max_len
        self.min_len = min_len
        self.max_chars = max_chars

    def read(self):
        print('Reading Sentences for language %s ...' % (self.lang))
        # Read as UTF-8 explicitly so the Arabic text decodes the same way on every platform.
        with open(self.path, 'r', encoding='utf-8') as reader:
            self.sentences += list(map(tools.cleaner_job, reader.readlines()))
        return self.sentences

    def get_tokenized(self):
        print('Tokenizing %s ...' % (self.lang))
        return [s.split() for s in self.sentences]
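A quick usage sketch shows what Dictionary ends up holding. The class is repeated here in condensed form (without the print call) only so that the snippet runs on its own, and the two example sentences are made up.

```python
class Dictionary:
    """Condensed copy of the Dictionary class above, repeated so this snippet is self-contained."""

    def __init__(self, name):
        self.name = name
        self.word_to_idx = {}
        self.idx_to_word = {0: "<S>", 1: "</S>"}
        self.words_count = {}
        self.n_words = 2  # indices 0 and 1 are reserved for SOS and EOS

    def add_word(self, word):
        if word not in self.word_to_idx:
            self.word_to_idx[word] = self.n_words
            self.words_count[word] = 1
            self.idx_to_word[self.n_words] = word
            self.n_words += 1
        else:
            self.words_count[word] += 1

    def add_sentence(self, sentence):
        for word in sentence.split():
            self.add_word(word)


english = Dictionary('english')
english.add_sentence('it was raining in Egypt yesterday')
english.add_sentence('yesterday it was raining')

print(english.word_to_idx)
# {'it': 2, 'was': 3, 'raining': 4, 'in': 5, 'Egypt': 6, 'yesterday': 7}
print(english.words_count['yesterday'])  # 2
print(english.n_words)                   # 8

# Encoding a sentence is then a simple lookup per word.
print([english.word_to_idx[w] for w in 'yesterday it was raining'.split()])  # [7, 2, 3, 4]
```

Words keep the index they received on first sight, so the same word maps to the same index regardless of where it appears in a sentence.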

The Reader class above serves as a base class; we now extend it a little to handle both the input and the output sentences.

To read the data we split each file into lines, then pair up the corresponding lines of the two files.

import pandas as pd


class SentenceReader(Reader):
    """Reads a parallel corpus and keeps only the pairs within the length limits."""

    def __init__(self, input_lang_map, outpt_lang_map, max_length=10):
        # The base-class lang/path fields just hold the two maps here;
        # the per-language readers are created below.
        super(SentenceReader, self).__init__(input_lang_map, outpt_lang_map, max_len=max_length)
        # Each map holds a single {language: path} entry.
        in_lang, in_path = next(iter(input_lang_map.items()))
        out_lang, out_path = next(iter(outpt_lang_map.items()))
        self.input = Reader(lang=in_lang, path=in_path, max_len=max_length)
        self.outpt = Reader(lang=out_lang, path=out_path, max_len=max_length)
        self.lang_map = {in_lang: self.input, out_lang: self.outpt}
        self.input.read()
        self.outpt.read()
        self.to_remove = set()
        self.to_have = set()
        self.__filter_sentences()

    def read_sentences(self, lang):
        lang = self.lang_map.get(lang)
        if lang:
            return lang.sentences
        else:
            raise AttributeError("Invalid Language Attribute")

    def get_tokenized(self, lang):
        print('Tokenizing %s ...' % (lang))
        sentences = self.lang_map.get(lang).sentences
        return [s.split() for s in sentences]

    def __filter_sentences(self):
        max_len, min_len = self.max_len, self.min_len
        to_have = self.to_have
        to_remove = self.to_remove
        # Mark an index for removal if the sentence is too long or too short in either language.
        for reader in [self.input, self.outpt]:
            for i, sentence in enumerate(reader.sentences):
                n_tokens = len(sentence.split())
                if n_tokens >= max_len or n_tokens < min_len:
                    to_remove.add(i)
                if i not in to_remove:
                    to_have.add(i)
        # Keep the same indices, in file order, on both sides so the pairs stay aligned.
        to_have = sorted(i for i in to_have if i not in to_remove)
        for reader in [self.input, self.outpt]:
            sent_series_obj = pd.Series(reader.sentences)
            reader.sentences = sent_series_obj[to_have].tolist()
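The filtering step can also be written without pandas. The sketch below is an alternative, not the author's implementation: the function name filter_pairs is hypothetical, and the sample sentences are borrowed from the dataset only for illustration. It applies the same rule as __filter_sentences (drop a pair when either side has fewer than min_len or at least max_len tokens) while walking both lists in lockstep, which keeps the pairs aligned by construction.

```python
def filter_pairs(inputs, outputs, max_len=10, min_len=1):
    """Keep only the pairs whose two sentences both have min_len <= tokens < max_len."""

    def ok(sentence):
        return min_len <= len(sentence.split()) < max_len

    kept = [(src, tgt) for src, tgt in zip(inputs, outputs) if ok(src) and ok(tgt)]
    kept_inputs = [src for src, _ in kept]
    kept_outputs = [tgt for _, tgt in kept]
    return kept_inputs, kept_outputs


en = ['Shut the door', 'I also went', 'Dont play baseball here']
ar = ['أغلق الباب', '', 'لا تلعب كرة القاعدة هنا']

kept_en, kept_ar = filter_pairs(en, ar, max_len=5)
print(kept_en)  # ['Shut the door']
print(kept_ar)  # ['أغلق الباب']
```

The second pair is dropped because its Arabic side is empty, and the third because its Arabic side has five tokens, which reaches max_len.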

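The next code snippet defines a function that accepts the input-language file path and the output-language file path and prepares the dataset, followed by helpers that pad the encoded sequences and convert them to tensors.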

import torch


def prepare_dataset(in_lang_path, out_lang_path, max_length=10):
    print('Preparing Dataset')
    reader = SentenceReader({'en': in_lang_path}, {'ar': out_lang_path}, max_length=max_length)
    pairs = list(zip(reader.read_sentences('en'), reader.read_sentences('ar')))

    print('Building Language Dictionary')
    in_dictionary = Dictionary('english')
    ou_dictionary = Dictionary('arabic')
    for in_sent, out_sent in pairs:
        in_dictionary.add_sentence(in_sent)
        ou_dictionary.add_sentence(out_sent)

    print('Encoding Tokenized Sentences to unique identifiers')
    in_word_to_idx = in_dictionary.word_to_idx
    ou_word_to_idx = ou_dictionary.word_to_idx
    pairs_encoded = [([in_word_to_idx[in_w] for in_w in in_sent],
                      [ou_word_to_idx[out_w] for out_w in out_sent])
                     for (in_sent, out_sent)
                     in zip(reader.get_tokenized('en'),
                            reader.get_tokenized('ar'))]
    return {
        'pairs': pairs,
        'pairs_encoded': pairs_encoded,
        'in_dictionary': in_dictionary,
        'out_dictionary': ou_dictionary,
    }


def pad_sequence(indices):
    """Pad every sequence in place with EOS up to the length of the longest one."""
    max_len = max((len(seq) for seq in indices), default=0)
    for seq in indices:
        seq += [EOS] * (max_len - len(seq))


def index_to_tensor(pairs):
    input_idxs = []
    output_idxs = []
    tensors = {}
    for pair in pairs.copy():
        # Inputs end with EOS; targets are wrapped in SOS ... EOS.
        input_idxs.append(pair[0] + [EOS])
        output_idxs.append([SOS] + pair[1] + [EOS])
    for indices in [input_idxs, output_idxs]:
        pad_sequence(indices)
    print(input_idxs[:10])
    print(output_idxs[:10])
    tensors['input'] = torch.tensor(input_idxs)
    tensors['target'] = torch.tensor(output_idxs)
    return tensors
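To make the padding step concrete, here is a small worked example in plain Python, without torch. The helper names pad and build_batch and the toy index pairs are made up for illustration; the logic mirrors index_to_tensor and pad_sequence above but returns new lists instead of padding in place.

```python
SOS, EOS = 0, 1


def pad(sequences, pad_value=EOS):
    """Return copies of the sequences, right-padded with pad_value to a common length."""
    max_len = max((len(seq) for seq in sequences), default=0)
    return [seq + [pad_value] * (max_len - len(seq)) for seq in sequences]


def build_batch(pairs_encoded):
    """Append EOS to inputs, wrap targets in SOS ... EOS, then pad each side."""
    inputs = [src + [EOS] for src, _ in pairs_encoded]
    targets = [[SOS] + tgt + [EOS] for _, tgt in pairs_encoded]
    return pad(inputs), pad(targets)


toy_pairs = [([5, 6, 7], [8, 9]), ([10], [11, 12, 13])]
inputs, targets = build_batch(toy_pairs)
print(inputs)   # [[5, 6, 7, 1], [10, 1, 1, 1]]
print(targets)  # [[0, 8, 9, 1, 1], [0, 11, 12, 13, 1]]
```

Because EOS doubles as the padding value, padded positions are indistinguishable from a real end of sentence; a dedicated padding index is a common alternative when the loss should ignore padding.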

data = loader.prepare_dataset('./eng.txt', './ara.txt', max_length=16)

Preparing Dataset

Reading Sentences for language en ...

Reading Sentences for language ar ...

Building Language Dictionary

Building Dictionary for lang english

Building Dictionary for lang arabic

Encoding Tokenized Sentences to unique identifiers

Tokenizing en ...

Tokenizing ar ...

import random

# Draw ten random text pairs and, independently, ten random encoded pairs.
data_text = [random.choice(data.get('pairs')) for _ in range(10)]
data_encoded = [random.choice(data.get('pairs_encoded')) for _ in range(10)]

for item in data_text:
    print(item)

for item in data_encoded:
    print(item)

('Im sure Tom will tell us the truth ', 'متأكد بأن توم سيخبرنا بالحقيقة ')

('Watch out Theres a big hole there ', 'انتبه هناك حفرة كبيرة هناك ')

('May I borrow your dictionary ', 'هل لي أن أستعير قاموسك؟ ')

('Im sure Ill find a good gift for Tom ', 'أنا متأكد أني سأجد هدية جيدة لتوم ')

('I dont know if I have the time ', 'لا أعلم إن كان لدي ما يكفي من الوقت ')

('Dont forget these ', 'لا تنس هذه ')

('Michael Jackson died ', 'توفي مايكل جاكسون ')

('Who knows what might happen in the future ', 'من يعلم ما قد يحصل مستقبلًا؟ ')

('I also went ', 'ذهبت أيضاً ')

('May I interrupt ', 'هل لي أن أقاطع؟ ')

([403, 802, 115, 1921], [627, 1722, 4256])

([159, 472, 8], [232, 88, 1441])

([11, 310, 332, 90, 2306], [1563, 1562, 2008])

([235, 55, 67], [77, 775, 18, 108])

([2986, 2987, 115, 1235, 2988], [7294, 3936, 7295])

([317, 3290, 115, 3291, 396, 694], [8257, 8258, 2953])

([1155, 451, 115, 833], [816, 716, 3437])

([19, 3116, 396, 451, 8, 396, 2841], [2630, 1397, 209, 7657])

([19, 559, 124, 804, 1550, 127], [861, 6070, 6071])

([11, 362, 124, 667, 3728, 454], [162, 411, 724, 9740, 9741])

Neural Network Modeling Using PyTorch

HIDDEN_SIZE = 128
INPUT_SIZE = data.get('in_dictionary').n_words    # English vocabulary size
OUTPUT_SIZE = data.get('out_dictionary').n_words  # Arabic vocabulary size

tensors = data.get('pairs_encoded')
dictionaries = {'output': data.get('out_dictionary'),
                'input': data.get('in_dictionary')}

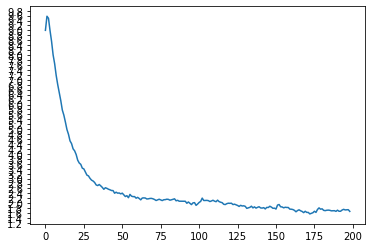

model = Seq2seqModel(iterations=1000000, lr=0.01, hidden_size=HIDDEN_SIZE, dictionaries=dictionaries,
                     input_size=INPUT_SIZE, output_size=OUTPUT_SIZE, max_length=16)

# ------ Training ------
model.train(tensors=tensors)

# ------ Evaluating ------
sentences = data.get('pairs')
model.evaluate(sentences)

Input | Shut the door

Output | أغلق الباب

Pred | أغلق الباب </S>

========================================================================

Input | Im afraid Tom doesnt want to talk to you

Output | أخشى أن توم لا يريد التحدث معك

Pred | أخشى أن لا لا </S>

========================================================================

Input | Do you remember the day when you and I first met

Output | هل تذكر اليوم الذي تقابلنا فيه أنا وأنت أول مرة؟

Pred | هل تذكر اليوم الذي تقابلنا فيه اليوم </S>

========================================================================

Input | I dont care about profit

Output | أنا لا أهتم للربح

Pred | لا أهتم أهتم للربح </S>

========================================================================

Input | Tom had to make a decision

Output | توجب على توم أن يتخذ قرارا

Pred | توجب أن توم يتخذ يتخذ قرارا </S>

========================================================================

Input | He tried to absorb as much of the local culture as possible

Output | حاول أن يستوعب أكبر قدر من الثقافة المحلية قدر الإمكان

Pred | حاول أن يستوعب أكبر قدر الثقافة الثقافة المحلية المحلية قدر </S>

========================================================================

Input | In Japan a new school year starts in April

Output | في اليابان السنة الدراسية الجديدة تبدأ في أبريل

Pred | في هناك السنة الدراسية تبدأ في تبدأ </S>

========================================================================

Input | Dont play baseball here

Output | لا تلعب كرة القاعدة هنا

Pred | لا تلعب كرة القاعدة هنا </S>

========================================================================

Input | Whats important is not the goal but the journey

Output | ما هو مهم ليس الهدف ولكن الرحلة

Pred | ليس ما مهم الهدف ولكن الرحلة </S>

========================================================================

Input | I figured youd be impressed

Output | توقعت أنك ستنبهر

Pred | توقعت أن ستنبهر </S>

========================================================================
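The predictions above are judged by eye. As a rough complement, one could score each prediction against its reference with a simple word-overlap measure. The sketch below is only an illustration: the function names are hypothetical, the two examples are copied from the output above, and clipped unigram precision is a crude stand-in for a proper translation metric such as BLEU.

```python
from collections import Counter


def strip_eos(sentence, eos='</S>'):
    """Tokenize on whitespace and drop the end-of-sentence marker."""
    return [w for w in sentence.split() if w != eos]


def unigram_precision(prediction, reference):
    """Fraction of predicted words that also occur in the reference (counts clipped)."""
    pred, ref = strip_eos(prediction), strip_eos(reference)
    if not pred:
        return 0.0
    overlap = Counter(pred) & Counter(ref)  # per-word minimum of the two counts
    return sum(overlap.values()) / len(pred)


examples = [
    ('أغلق الباب </S>', 'أغلق الباب'),
    ('أخشى أن لا لا </S>', 'أخشى أن توم لا يريد التحدث معك'),
]

for pred, ref in examples:
    exact = strip_eos(pred) == strip_eos(ref)
    print(exact, unigram_precision(pred, ref))
```

The first example is an exact match with precision 1.0; the second is not, and its repeated word is counted only once, which gives 3 matching words out of 4 predicted.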