Recommendation Systems Walkthrough - Build Content Based Recommenders

This post discusses different approaches for recommending movies based on the content of the movie itself, not just on user preferences.

We previously discussed how we built a recommender module based on user preferences; you may want to read about the story here. There, we used similar movies to match the user's taste in movies.

In this post, we are going to make use of the content available for each movie, such as:

- Movie Title

- Movie Summary

- Movie Description

- Movie Category

- Movie Director / Actor / Casting Team

Content-based filtering makes recommendations by using item metadata such as genres, title, description, actors, director, etc.

The idea is that if a user liked a particular item, they may also like items similar to it.

A lot of movie metadata can be used to build content-based recommendations. We can use the movie title, on the assumption that similar movies may have similar titles. The movie description can also be informative when we measure similarity between movies.

What about the cast and the director? I would like to watch movies by the same director again.
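As a minimal sketch of how these metadata fields could be fed to a text model, the snippet below joins them into one string per movie. The field names (`title`, `summary`, `description`, `categories`, `director`, `cast`), the helper `build_content_text`, and the sample record are all hypothetical and chosen for illustration; they are not taken from the actual dataset.

```python
def build_content_text(movie):
    """Join the metadata fields of one movie into a single lowercase string."""
    parts = [
        movie.get("title", ""),
        movie.get("summary", ""),
        movie.get("description", ""),
        " ".join(movie.get("categories", [])),
        movie.get("director", ""),
        " ".join(movie.get("cast", [])),
    ]
    # Skip empty fields so missing metadata does not add stray spaces.
    return " ".join(p.strip() for p in parts if p and p.strip()).lower()


# Hypothetical record, for illustration only.
movie = {
    "title": "Example Movie",
    "description": "A detective hunts a thief.",
    "categories": ["Crime", "Thriller"],
    "director": "Jane Doe",
    "cast": ["Actor One", "Actor Two"],
}

content_text = build_content_text(movie)
print(content_text)
```

Keeping everything in one string is only one possible design; fields such as the director could also be weighted or embedded separately.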

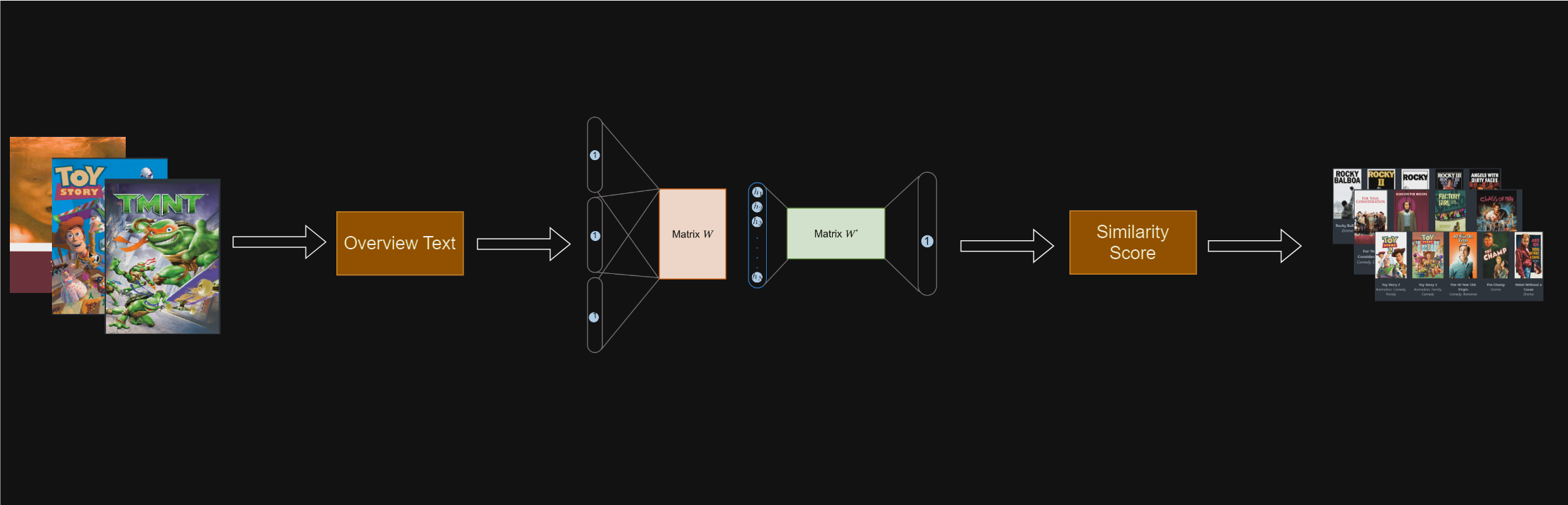

Architecture Overview

Embeddings

Embedding models have revolutionized how language is handled in NLP. I am not going to focus on how embedding models work here, but rather on how they can be used to vectorize text in a suggestion engine pipeline.

Why embeddings and not a simpler representation like TF-IDF?

Embedding models have several advantages over the traditional TF-IDF method in information retrieval systems. The main ones are:

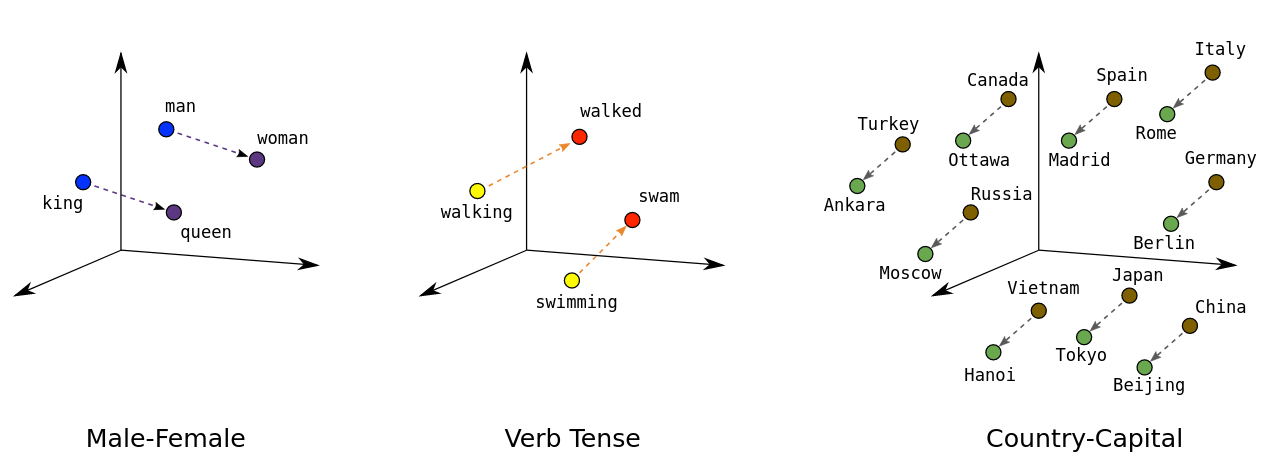

Word2Vec: creates dense vector representations where words with similar meanings are positioned closer together in the vector space. For example, “king” and “queen” will be closer in the vector space, capturing their semantic similarity.

TF-IDF: treats words as independent features and does not capture any semantic relationship between them. It only considers the frequency and distribution of words, so “king” and “queen” would be treated as entirely separate features.

Word2Vec: reduces the dimensionality of the representation by embedding words into a continuous vector space of typically a few hundred dimensions (e.g., 100-300 dimensions). This helps in reducing computational complexity and memory usage.

TF-IDF: results in a sparse matrix where the number of dimensions equals the size of the vocabulary. For large corpora, this can lead to very high-dimensional data, which can be computationally expensive to process.
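To make the TF-IDF side of this comparison concrete, here is a small pure-Python sketch. It is a simplified TF-IDF (raw term counts with a smoothed IDF), not the implementation of any particular library, and the three toy sentences are made up for illustration. It shows that each vector has one dimension per vocabulary word and that two documents with no shared words get a similarity of zero, even when “king” and “queen” are related in meaning.

```python
import math
from collections import Counter


def tfidf_vectors(docs):
    """Return (vocabulary, vectors), with one list of weights per document.

    Uses raw term counts and a smoothed IDF: log((1 + N) / (1 + df)) + 1.
    """
    tokenized = [doc.lower().split() for doc in docs]
    vocab = sorted({tok for toks in tokenized for tok in toks})
    n_docs = len(docs)
    # Document frequency: in how many documents each token appears.
    df = Counter(tok for toks in tokenized for tok in set(toks))
    idf = {tok: math.log((1 + n_docs) / (1 + df[tok])) + 1 for tok in vocab}
    vectors = []
    for toks in tokenized:
        counts = Counter(toks)
        vectors.append([counts[tok] * idf[tok] for tok in vocab])
    return vocab, vectors


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


docs = ["the king rules", "a queen reigns", "the king returns"]
vocab, vectors = tfidf_vectors(docs)

print(len(vocab), len(vectors[0]))     # vector length equals vocabulary size
print(cosine(vectors[0], vectors[1]))  # no shared words between these two
print(cosine(vectors[0], vectors[2]))  # "the" and "king" are shared
```

With a real corpus the vocabulary grows to tens or hundreds of thousands of words, which is where the sparsity and dimensionality concerns above come from.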

To read more about embedding models and how they work under the hood, I will just leave these wonderful articles:

https://www.kdnuggets.com/2022/10/deep-learning-nlp-embeddings-models-word2vec-glove-sparse-matrix-tfidf.html?ref=localhost

https://colah.github.io/posts/2014-07-NLP-RNNs-Representations/?ref=localhost


In summary, we are going to use a Word2vec embedding model to represent the textual content of a movie and feed it to a similarity scoring system in order to extract the most similar items.

Since we have a semantic representation of the textual content of the movie metadata, we expect the scoring system to perform well.
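The pipeline described above can be sketched roughly as follows. This is a minimal illustration under several assumptions: the 3-dimensional word vectors are invented by hand (a trained Word2vec model would supply vectors of a few hundred dimensions), a movie is represented by averaging the vectors of its words, and similarity is scored with cosine similarity. The names `embed`, `most_similar`, and the sample movies are hypothetical.

```python
import math

# Toy 3-dimensional word vectors, made up for illustration.
word_vectors = {
    "king": [0.9, 0.8, 0.1],
    "queen": [0.85, 0.82, 0.15],
    "castle": [0.7, 0.6, 0.2],
    "robot": [0.1, 0.2, 0.9],
    "space": [0.05, 0.1, 0.95],
}


def embed(text):
    """Average the vectors of the known words in a text."""
    vecs = [word_vectors[w] for w in text.lower().split() if w in word_vectors]
    if not vecs:
        return [0.0, 0.0, 0.0]
    return [sum(dim) / len(vecs) for dim in zip(*vecs)]


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def most_similar(query_title, movies, top_k=2):
    """Rank all other movies by cosine similarity to the query movie."""
    query_vec = embed(movies[query_title])
    scores = [
        (title, cosine(query_vec, embed(text)))
        for title, text in movies.items()
        if title != query_title
    ]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)[:top_k]


# Hypothetical movies, each reduced to a short content string.
movies = {
    "Movie A": "king castle",
    "Movie B": "queen castle",
    "Movie C": "robot space",
}

ranking = most_similar("Movie A", movies)
print(ranking)
```

In this toy setup "Movie B" ranks first for "Movie A" even though their content strings share only one word, because the vectors for "king" and "queen" point in nearly the same direction.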

I trained a Word2vec model with the skip-gram architecture on 10,000 movie descriptions from the TMDB database, with a vocabulary of 544,115 tokens, on a GPU.
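For readers unfamiliar with skip-gram, the sketch below shows only how its training examples are formed: each word is paired with the words inside a fixed window around it, and the model learns to predict the context word from the center word. This is not the training code used for this post; the function name `skipgram_pairs`, the window size, and the sample sentence are assumptions for illustration.

```python
def skipgram_pairs(tokens, window=2):
    """Return (center, context) pairs for every word within `window` positions."""
    pairs = []
    for i, center in enumerate(tokens):
        lo = max(0, i - window)
        hi = min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:  # a word is not its own context
                pairs.append((center, tokens[j]))
    return pairs


# Hypothetical movie description, tokenized on whitespace.
tokens = "a detective hunts a thief".split()
pairs = skipgram_pairs(tokens, window=1)
print(pairs)
```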