Real-Time Event Detection on Twitter

This post discusses the highlights of event detection on Twitter using natural language processing.

Table of contents:

- Event Detection

- Research Questions

- Feature Engineering

- The challenges of Twitter

- Available public datasets

- Clustering events

Event Detection

Definitions of events according to McMinn et al. (2013), from the paper by Andrew J. McMinn, Yashar Moshfeghi, and Joemon M. Jose presented at the ACM International Conference on Information and Knowledge Management (CIKM 2013):

Definition 1:

An event is a significant thing that happens at some specific time and place (Aggarwal and Subbian).

Definition 2:

Something is significant if it may be discussed in the media. For example, you may read a news article or watch a news report about it.

Definition 3:

News tweet: a tweet that refers to a specific news event, or is in any other way directly related to such an event as described in Definition 1. A news event, more specifically, is a group of news tweets discussing the same topic at similar times. Any tweets that are not related to news are considered irrelevant.

Types of events:

- Specified event types: the type of event to detect is known in advance (for example catastrophes, riots, music concerts, or news).

- Detection time: we may want to detect events that occurred in the past, called retrospective event detection in the research literature, or new events as they emerge (new event detection).

- Unspecified event types: these fall under the open-domain event detection approach. Because the definition of an event is more general here, the task becomes more complex, and this type of event is more likely to be addressed with unsupervised machine learning approaches.

Research Questions

- How can news events be detected in real-time from the Twitter stream?

- How should features be chosen from tweets, and how should they be represented when using an artificial neural network to filter news tweets from non-news tweets?

- How can we extract only the subset of tweets that are not spam?

- How can we cluster semantically and temporally similar news tweets to form events in real time?

- How can we identify the important news events to report?

- How should the system be evaluated, and what comes next?

Feature Engineering

Feature engineering is one of the great challenges when working with tweets. The textual content itself is likely to be an important feature for event detection from tweets. It is therefore important to come up with a way of representing the text that captures the information the language model needs for the target task (event detection, news monitoring, tweet classification, etc.). On the other hand, it is important to keep the dimensionality low enough to feed the representation to a machine learning algorithm while keeping the computational cost feasible.

Language Modeling

For retrieving information from text, documents are typically transformed into suitable representations using one of many available modeling approaches.

Traditional language models

Traditional language modeling has been used for a long time and has produced acceptable results in text mining and with many machine learning algorithms.

- Term vectors, or bag-of-words (BOW), a word-count-based approach.

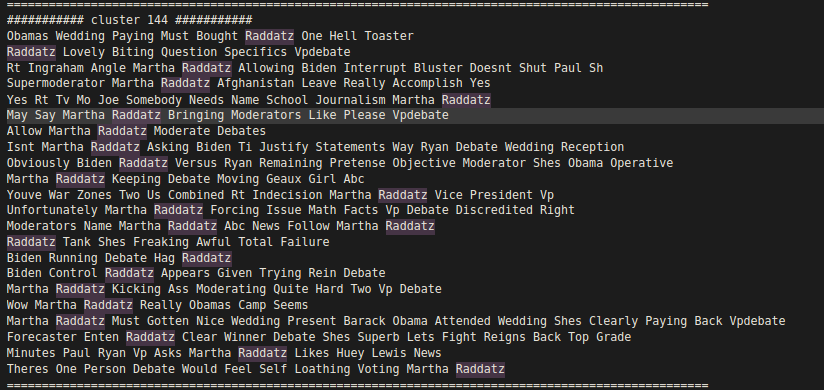

- Another approach is term vectors weighted using term frequency-inverse document frequency (TF-IDF).
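A minimal sketch of both traditional representations in plain Python, assuming a naive whitespace tokenizer and the common tf × log(N/df) weighting (other TF-IDF variants exist, for example with smoothing). The example tweets and the helper names `tokenize`, `bow`, and `tfidf` are hypothetical, for illustration only.

```python
import math
from collections import Counter


def tokenize(text):
    # Naive lowercase/whitespace tokenizer (assumption: a real pipeline would
    # also handle URLs, mentions, hashtags, and punctuation).
    return text.lower().split()


def bow(tokens, vocab):
    # Bag-of-words: raw count of each vocabulary term in the tweet.
    counts = Counter(tokens)
    return [counts[term] for term in vocab]


def tfidf(tokens, vocab, docs):
    # TF-IDF: term count weighted by log(N / df), where df is the number of
    # tweets containing the term. Terms present in every tweet get weight 0.
    counts = Counter(tokens)
    n_docs = len(docs)
    weights = []
    for term in vocab:
        df = sum(1 for doc in docs if term in doc)
        idf = math.log(n_docs / df) if df else 0.0
        weights.append(counts[term] * idf)
    return weights


tweets = [
    "earthquake hits tokyo",
    "earthquake damage in tokyo",
    "great concert tonight",
]
docs = [set(tokenize(t)) for t in tweets]
vocab = sorted(set().union(*docs))
first = tokenize(tweets[0])

print(vocab)
print(bow(first, vocab))  # [0, 0, 1, 0, 1, 0, 1, 0]
print(tfidf(first, vocab, docs))  # "hits" outweighs the more common "earthquake"
```

Even with three tweets, each vector is mostly zeros; with a real Twitter vocabulary the length of `vocab` grows into the hundreds of thousands.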

IDF-Weighted Word Vectors (IDF2VEC):

Unfortunately, these approaches are prone to:

- Curse of dimensionality: the tweet vectors become even larger when working with Twitter data, given the vast number of out-of-vocabulary (OOV) terms.

- Loss of context: the word-count-based approach does not take the context of words into consideration.

- Sparse vectors: tweets are limited to 140 characters and contain 10.7 words on average, while the English language contains over one million words, so representing tweets as simple term vectors is unfeasible because of the enormous sparsity (the vectors are almost entirely zeros). Similarity between two such vectors is rarely accurate, because the term overlap between two short tweets is usually too small.
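To make the sparsity problem concrete, here is a small sketch showing that two tweets about the same event can share no terms at all, so the cosine similarity of their term vectors is 0. The tweets are hypothetical, and the vocabulary size is an assumed value mirroring the "over one million words" figure quoted in the text.

```python
import math
from collections import Counter


def cosine(a, b):
    # Cosine similarity between two sparse term-count vectors stored as dicts;
    # only shared terms contribute to the dot product.
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


# Two hypothetical tweets about the same event with no words in common.
t1 = Counter("earthquake hits tokyo".split())
t2 = Counter("strong quake shakes japan".split())
print(cosine(t1, t2))  # 0.0

# Assumed vocabulary size, used only to show how few dimensions are non-zero.
VOCAB_SIZE = 1_000_000
density = len(t1) / VOCAB_SIZE
print(f"non-zero dimensions: {len(t1)} of {VOCAB_SIZE} ({density:.6%})")
```

Storing only the non-zero entries in a dict keeps memory manageable, but it does not fix the core issue: without shared terms the similarity is zero regardless of meaning.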

Fortunately and Unfortunately:

Some alternative data representations found in the TDT (Topic Detection and Tracking) literature are the named entity (NE) vector (Kumaran and Allan, 2004) [https://www.semanticscholar.org/paper/Text-classification-and-named-entities-for-new-Kumaran-Allan/0229c0eb39efed90db6691469daf0bb7244cf649] and the mixed vector of terms plus named entities (Yang et al., 2002) [https://dl.acm.org/citation.cfm?id=1220591].

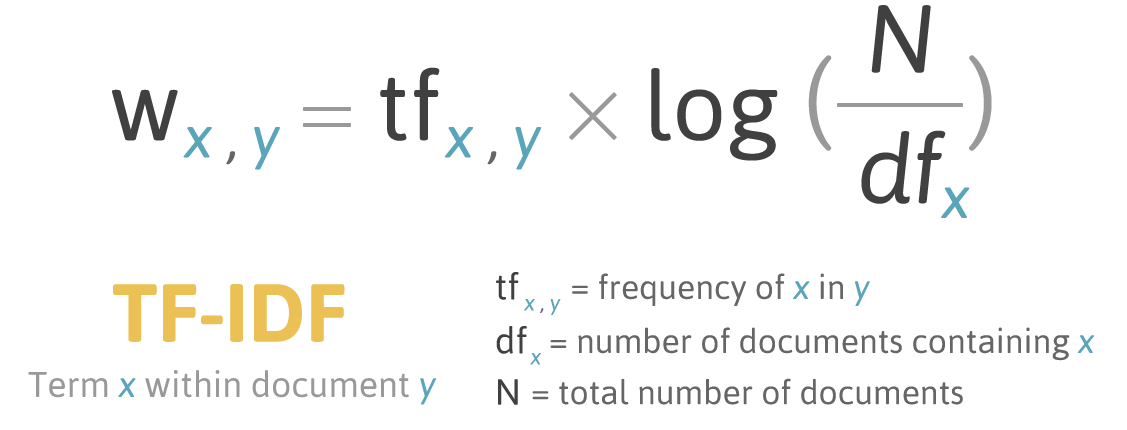
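A rough sketch of the NE vector and the mixed vector, assuming entities have already been extracted by an upstream NER tagger (the entity list below is hard-coded and hypothetical). Entity features get an `NE:` prefix so they occupy their own dimensions next to the ordinary terms; this is a simplified illustration, not the exact weighting used by Kumaran and Allan or Yang et al.

```python
from collections import Counter


def ne_vector(entities):
    # Named-entity vector: counts of entity mentions only.
    return Counter("NE:" + e for e in entities)


def mixed_vector(tokens, entities):
    # Mixed vector: ordinary term counts plus the named-entity counts.
    # The "NE:" prefix keeps entity features in their own dimensions.
    vec = Counter(tokens)
    vec.update(ne_vector(entities))
    return vec


tokens = "mo yan wins nobel in literature".split()
entities = ["mo yan", "nobel"]  # hypothetical output of an upstream NER tagger

print(ne_vector(entities))
print(mixed_vector(tokens, entities))
```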

Neural language models

When dealing with short Twitter texts, we need a representation that is less noisy than those of traditional IR systems. Word embeddings solve this problem efficiently: they have revolutionized NLP and are used in many applications because they give much denser vector representations of words than the traditional approaches.

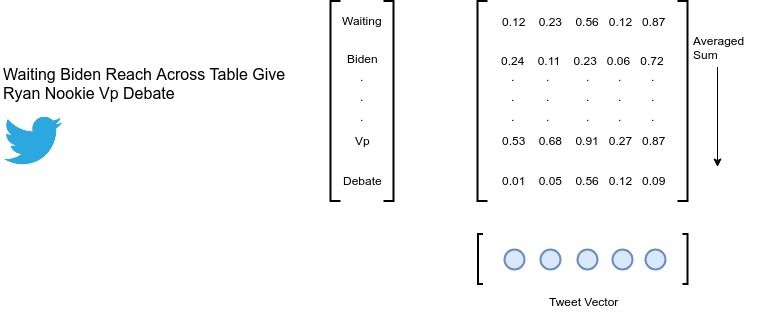

Average Word2Vec (AvgW2V):

Based on the Word2Vec approach, we use a large corpus of tweets (preferably from the same domain) to train an embedding model that encodes tweet features contextually. To represent each tweet, each term in the tweet is mapped to its corresponding vector according to the learned embedding weights, and these vectors are then averaged to produce a representative tweet vector.
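A minimal sketch of AvgW2V, assuming a pre-trained embedding lookup is available. The 3-dimensional vectors in the hypothetical `EMBEDDINGS` table are made up for illustration (a real model would be trained with Word2Vec on a tweet corpus and have many more dimensions), and out-of-vocabulary terms are simply skipped, which is one common choice.

```python
import math
from typing import Dict, List, Optional

# Hypothetical 3-dimensional embeddings; a real model would be trained with
# Word2Vec on a large tweet corpus and have many more dimensions.
EMBEDDINGS: Dict[str, List[float]] = {
    "earthquake": [0.9, 0.1, 0.0],
    "quake": [0.8, 0.1, 0.1],
    "tokyo": [0.1, 0.9, 0.1],
    "japan": [0.2, 0.8, 0.1],
}


def avg_w2v(tokens: List[str], emb: Dict[str, List[float]]) -> Optional[List[float]]:
    # Map each in-vocabulary term to its vector; OOV terms are skipped.
    vectors = [emb[t] for t in tokens if t in emb]
    if not vectors:
        return None  # no known terms, so the tweet has no representation
    dim = len(vectors[0])
    # The element-wise mean of the word vectors is the tweet vector.
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


def cosine(a: List[float], b: List[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


v1 = avg_w2v("earthquake hits tokyo".split(), EMBEDDINGS)
v2 = avg_w2v("quake shakes japan".split(), EMBEDDINGS)
print(cosine(v1, v2))  # close to 1 despite zero word overlap
```

Unlike bag-of-words vectors, the two example tweets share no words yet end up with nearly parallel averaged vectors, because their words sit close together in the embedding space.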

Paragraph Vectors (Doc2Vec):

Thanks to Le and Mikolov (2014), NLP gained a technique for representing whole documents rather than individual tokens. It is a generalization of the Word2Vec method that learns relationships between documents, sometimes called paragraph embeddings; in our task, we could call them tweet embeddings.

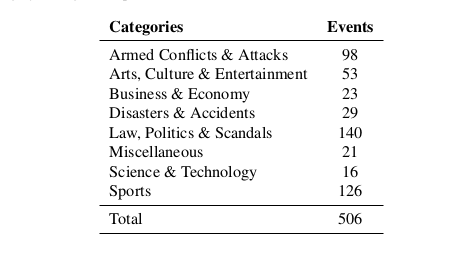

Datasets

McMinn et al. (2013) address the lack of publicly available corpora for evaluating event detection approaches on Twitter. They created the corpus by downloading a collection of 120 million English tweets using the Twitter Streaming API over 28 days, starting on 10 October 2012.

The corpus from McMinn et al., "Building a large-scale corpus for evaluating event detection on Twitter", is publicly available here: [https://docs.google.com/forms/d/1o-sv3E4IyMy3U_3i9HMR_ZeKgVyF-zOP-bp7JucIy6c/viewform]

Clustering Events

In my implementation I followed the architecture of the TwitterNews monitoring tool (the paper is listed in the References section). We have no prior knowledge of the number of events we are going to collect, and the data is very large, since Twitter produces hundreds of millions of tweets every day. In this setting, clustering algorithms such as K-Means (which needs the number of clusters in advance) and DBSCAN, or dimensionality-reduction techniques such as PCA and t-SNE, may not be scalable solutions.

What about clustering by inverted index?

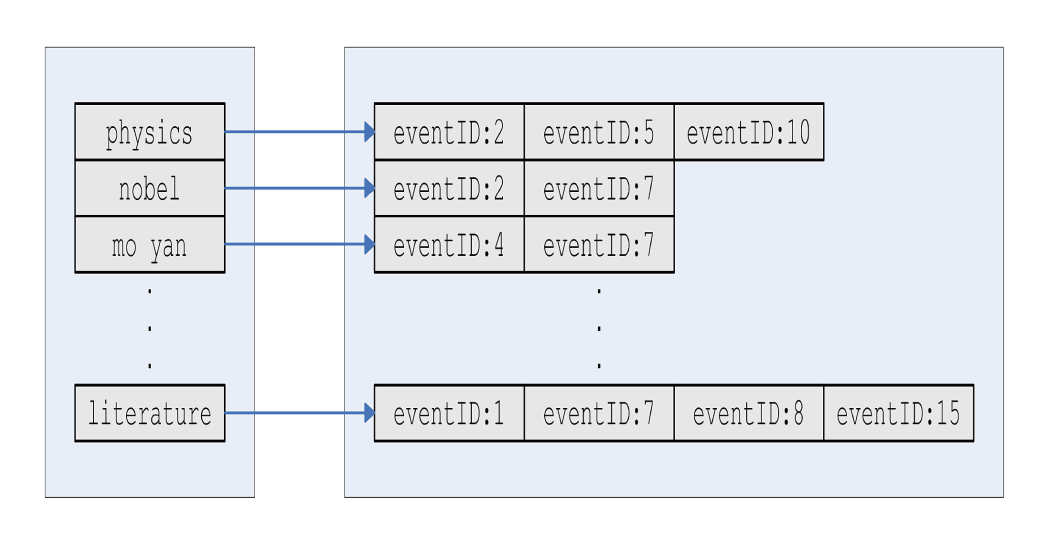

Term Tweets Inverted Index:

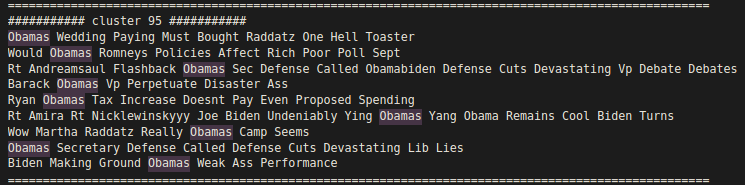

In TwitterNews (M. Hasan et al.), a term-tweets inverted index is constructed and stored to map each token to the tweets in which it occurs. For the sake of memory optimization, the inverted index is limited to a finite size of K × Q, so every time a new tweet arrives from the Streaming API, the oldest entry is replaced with the latest one.
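A sketch of a bounded term-tweets inverted index, under the assumption that the K × Q bound means at most `max_terms` terms, each keeping only its `max_ids_per_term` most recent tweet IDs. Both parameter names, the class name, and the least-recently-updated eviction policy for terms are hypothetical choices, not taken from the paper.

```python
from collections import OrderedDict, deque
from typing import Deque, Iterable, List


class BoundedInvertedIndex:
    """Term -> most recent tweet IDs, capped at max_terms x max_ids_per_term.

    Hypothetical stand-in for the paper's K x Q bound; the eviction policy
    (least recently updated term, oldest tweet ID per term) is an assumption.
    """

    def __init__(self, max_terms: int, max_ids_per_term: int) -> None:
        self.max_terms = max_terms
        self.max_ids_per_term = max_ids_per_term
        self.postings: "OrderedDict[str, Deque[int]]" = OrderedDict()

    def add(self, tweet_id: int, terms: Iterable[str]) -> None:
        for term in dict.fromkeys(terms):  # de-duplicate, keep order
            if term in self.postings:
                self.postings.move_to_end(term)  # mark as most recently updated
            else:
                if len(self.postings) >= self.max_terms:
                    self.postings.popitem(last=False)  # evict the stalest term
                self.postings[term] = deque(maxlen=self.max_ids_per_term)
            # A full deque drops its oldest tweet ID when a new one arrives.
            self.postings[term].append(tweet_id)

    def lookup(self, term: str) -> List[int]:
        return list(self.postings.get(term, ()))


index = BoundedInvertedIndex(max_terms=1000, max_ids_per_term=2)
index.add(1, ["nobel", "literature"])
index.add(2, ["nobel"])
index.add(3, ["nobel", "prize"])
print(index.lookup("nobel"))  # [2, 3]: tweet 1 was replaced
print(index.lookup("literature"))  # [1]
```

`deque(maxlen=...)` gives the replace-the-oldest behaviour for free, and `OrderedDict.move_to_end` plus `popitem(last=False)` keeps the number of terms bounded, so memory stays constant however long the stream runs.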

In the Search module, for each input tweet d, the top k TF-IDF-weighted terms are selected and used to search for tweets that contain those terms. Each retrieved tweet is then compared with d using cosine similarity to determine the novelty of d: based on a threshold, we decide whether d is unique (no retrieved tweet is similar enough) or not.
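The Search module's novelty check can be sketched as follows. The IDF values, the tiny term-to-tweet-ID index, `k = 3`, and the 0.5 threshold are all hypothetical; tweets are represented as sparse TF-IDF dicts, and "mo yan" is treated as a single term, matching the example tweet used in this section. The function name `is_unique` is illustrative.

```python
import math
from collections import Counter
from typing import Dict, List, Set


def tfidf_vector(tokens: List[str], idf: Dict[str, float]) -> Dict[str, float]:
    # Sparse TF-IDF vector; terms without a known IDF get weight 0.
    return {t: c * idf.get(t, 0.0) for t, c in Counter(tokens).items()}


def cosine(a: Dict[str, float], b: Dict[str, float]) -> float:
    dot = sum(w * b.get(t, 0.0) for t, w in a.items())
    norm = math.sqrt(sum(w * w for w in a.values())) * math.sqrt(
        sum(w * w for w in b.values())
    )
    return dot / norm if norm else 0.0


def is_unique(
    tokens: List[str],
    idf: Dict[str, float],
    index: Dict[str, Set[int]],
    vectors: Dict[int, Dict[str, float]],
    k: int = 3,
    threshold: float = 0.5,
) -> bool:
    # 1. Pick the top-k TF-IDF-weighted terms of the input tweet d.
    vec = tfidf_vector(tokens, idf)
    top_terms = sorted(vec, key=vec.get, reverse=True)[:k]
    # 2. Retrieve every indexed tweet that contains at least one of them.
    candidates: Set[int] = set()
    for term in top_terms:
        candidates |= index.get(term, set())
    # 3. d is unique if no candidate reaches the similarity threshold.
    best = max((cosine(vec, vectors[c]) for c in candidates), default=0.0)
    return best < threshold


# Hypothetical IDF values and a tiny term -> tweet-ID index.
idf = {"mo yan": 3.0, "nobel": 2.5, "literature": 2.0, "prize": 1.5,
       "wins": 1.0, "in": 0.1, "great": 0.5, "concert": 2.0, "tonight": 0.8}
vectors = {
    3: tfidf_vector(["nobel", "prize"], idf),
    5: tfidf_vector(["mo yan", "nobel", "literature"], idf),
    7: tfidf_vector(["prize"], idf),
}
index = {"nobel": {3, 5}, "literature": {5}, "prize": {3, 7}, "mo yan": {5}}

print(is_unique(["mo yan", "wins", "nobel", "in", "literature"], idf, index, vectors))  # False
print(is_unique(["great", "concert", "tonight"], idf, index, vectors))  # True
```

Only tweets reachable through the top-k terms are compared, so the cost per input tweet is bounded by the index size rather than by the whole stream.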

If the tweet is determined to be not unique, it is sent to the EventCluster module, where a term-events inverted index is constructed accordingly.

Let's illustrate this idea with an example. Consider the input tweet “Mo Yan wins Nobel in Literature”, where the top three TF-IDF-weighted terms are [mo yan, nobel, literature].

Each of the terms is searched in the term-tweets inverted index, and the tweets with IDs = [3, 5, 7, 15, 18, 21, 25] are retrieved (see the term-tweets inverted index figure).

Term Events Inverted Index:

Alongside the table that maps the most trending tokens to their tweet IDs, TwitterNews (M. Hasan et al.) maintains a second relation between tokens and event IDs: the term-events inverted index, which maps each token to its corresponding event clusters.

To illustrate this idea with the same example used in the Search module, suppose the input tweet “Mo Yan wins Nobel in Literature” is judged “not unique” and sent to the EventCluster module. The top three TF-IDF-weighted terms of the tweet are “mo yan”, “nobel”, and “literature”. Each of the terms is searched in the term-eventIDs inverted index, and the event clusters with IDs = [1, 2, 4, 7, 8, 15] are retrieved. The input tweet vector is compared with the centroid of each retrieved event cluster and assigned to the cluster with the highest cosine similarity. If that highest similarity is below a certain threshold, a new cluster is created and the tweet is added to it.

Note that the total number of event clusters with which the input tweet is compared is always bounded by K × E. Over time, fragmented sub-clusters of the same event may be created due to topic drift. According to the authors, this problem can be fixed later with a defragmentation step that merges similar clusters based on some heuristic.
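A sketch of the EventCluster step under stated assumptions: each cluster keeps a running sum of its member vectors so the centroid is their mean, only a tweet's top-k terms are written to the term-events index, and the threshold value is illustrative. The class and method names are hypothetical.

```python
import math
from typing import Dict, Optional, Set


def cosine(a: Dict[str, float], b: Dict[str, float]) -> float:
    dot = sum(w * b.get(t, 0.0) for t, w in a.items())
    norm = math.sqrt(sum(w * w for w in a.values())) * math.sqrt(
        sum(w * w for w in b.values())
    )
    return dot / norm if norm else 0.0


class EventClusterer:
    """Hypothetical EventCluster sketch using a term -> event-ID inverted index."""

    def __init__(self, threshold: float = 0.5, k: int = 3) -> None:
        self.threshold = threshold  # illustrative value
        self.k = k
        self.term_events: Dict[str, Set[int]] = {}
        self.sums: Dict[int, Dict[str, float]] = {}  # per-cluster vector sum
        self.sizes: Dict[int, int] = {}

    def centroid(self, cid: int) -> Dict[str, float]:
        # Centroid = mean of the member tweet vectors.
        n = self.sizes[cid]
        return {t: w / n for t, w in self.sums[cid].items()}

    def add(self, vec: Dict[str, float]) -> int:
        top_terms = sorted(vec, key=vec.get, reverse=True)[: self.k]
        # Retrieve candidate clusters through the term -> event-ID index.
        candidates: Set[int] = set()
        for term in top_terms:
            candidates |= self.term_events.get(term, set())
        best_id: Optional[int] = None
        best_sim = -1.0
        for cid in sorted(candidates):
            sim = cosine(vec, self.centroid(cid))
            if sim > best_sim:
                best_id, best_sim = cid, sim
        # No candidate, or best similarity below threshold: open a new cluster.
        if best_id is None or best_sim < self.threshold:
            best_id = len(self.sizes)
            self.sums[best_id] = {}
            self.sizes[best_id] = 0
        # Fold the tweet into the chosen cluster's running sum.
        total = self.sums[best_id]
        for t, w in vec.items():
            total[t] = total.get(t, 0.0) + w
        self.sizes[best_id] += 1
        for term in top_terms:
            self.term_events.setdefault(term, set()).add(best_id)
        return best_id


clusterer = EventClusterer(threshold=0.5)
print(clusterer.add({"mo yan": 3.0, "nobel": 2.5, "literature": 2.0}))  # 0 (new)
print(clusterer.add({"mo yan": 3.0, "nobel": 2.5, "wins": 1.0}))  # 0 (joins)
print(clusterer.add({"earthquake": 2.0, "tokyo": 1.5}))  # 1 (new)
```

Keeping a running sum instead of the member list makes each centroid update O(terms in the tweet), which suits a streaming setting.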
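The source leaves the defragmentation heuristic open. One possible heuristic, shown here purely as an assumption, is single-link merging: any two clusters whose centroids reach a cosine-similarity threshold are placed in the same group, using a small union-find. The function name `defragment` and the 0.8 default are hypothetical.

```python
import math
from itertools import combinations
from typing import Dict, List


def cosine(a: Dict[str, float], b: Dict[str, float]) -> float:
    dot = sum(w * b.get(t, 0.0) for t, w in a.items())
    norm = math.sqrt(sum(w * w for w in a.values())) * math.sqrt(
        sum(w * w for w in b.values())
    )
    return dot / norm if norm else 0.0


def defragment(
    centroids: Dict[int, Dict[str, float]], threshold: float = 0.8
) -> List[List[int]]:
    # Union-find over cluster IDs: link any pair whose centroids are similar.
    parent = {cid: cid for cid in centroids}

    def find(x: int) -> int:
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    for a, b in combinations(sorted(centroids), 2):
        if cosine(centroids[a], centroids[b]) >= threshold:
            parent[find(a)] = find(b)

    # Collect each connected group of cluster IDs (one group per merged event).
    groups: Dict[int, List[int]] = {}
    for cid in sorted(centroids):
        groups.setdefault(find(cid), []).append(cid)
    return list(groups.values())


centroids = {
    0: {"mo yan": 3.0, "nobel": 2.5},
    1: {"mo yan": 2.8, "nobel": 2.0, "speech": 0.5},  # drifted sub-cluster
    2: {"earthquake": 2.0, "tokyo": 1.5},
}
print(defragment(centroids))  # [[0, 1], [2]]
```

Single-link merging can chain loosely related clusters together through intermediate ones, so a stricter rule (for example requiring similarity to the merged centroid) may be preferable in practice.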

References:

- McMinn, A.J., Moshfeghi, Y., Jose, J.M.: Building a large-scale corpus for evaluating event detection on Twitter. CIKM 2013.

- Hasan, M. et al.: TwitterNews+: A Framework for Real Time Event Detection from the Twitter Data Stream.

- Real-Time Novel Event Detection from Social Media.

- Ruder, S.: Blog posts, a comprehensive summary of word embeddings.